- cross-posted to:

- programmerhumor@lemmy.ml


Electron everywhere.

And analytics. And offloading as much computation to the client, because servers are expensive and inefficiency is not an issue if your users are the ones paying for it.

I saw an ad request with an inline 1.4 MB game. Like, you could fit Mario in there.

The Samsung shop hands out 1.4 MB JSON responses for order tracking, with what I estimate is 99% redundant information, repeated many times in different parts of the structure.
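One common way to cut that kind of repetition is to normalize the payload: send each repeated object once and have everything else refer to it by id. A minimal TypeScript sketch, assuming hypothetical `Product` / `DenormalizedLine` shapes (nothing here reflects Samsung's actual API):

```typescript
// Hypothetical shapes for illustration only; not any real shop's API.
interface Product { id: string; name: string; description: string; }
interface DenormalizedLine { quantity: number; product: Product; }
interface NormalizedPayload {
  products: Record<string, Product>;
  lines: { quantity: number; productId: string }[];
}

// Store each distinct product once and have order lines refer to it by id.
function normalize(lines: DenormalizedLine[]): NormalizedPayload {
  const products: Record<string, Product> = {};
  const out: NormalizedPayload["lines"] = [];
  for (const line of lines) {
    // Repeated copies of the same product simply overwrite the stored one.
    products[line.product.id] = line.product;
    out.push({ quantity: line.quantity, productId: line.product.id });
  }
  return { products, lines: out };
}

const phone: Product = {
  id: "p1",
  name: "Phone",
  description: "Long marketing copy repeated in every order line",
};
const sample: DenormalizedLine[] = [
  { quantity: 1, product: phone },
  { quantity: 2, product: phone },
  { quantity: 1, product: phone },
];

// Compare serialized sizes before and after normalizing.
const before = JSON.stringify(sample).length;
const after = JSON.stringify(normalize(sample)).length;
console.log(before, after);
```

Gzip on the wire already squeezes repeated text quite well, so the bigger win from normalizing is usually less JSON for the client to parse and hold in memory.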

Web “Apps” are also quite bad. Lots and lots of stuff we’re downloading, and it feels clunky.

Sometimes that’s bad coding, poor optimization, third party libraries, or sometimes just including trackers/ads on the page.

I vaguely recall a recent-ish article saying that the average web page is 30 MB. That’s right, thirty megabytes.

It’s amazing how much faster web browsing becomes when I run Pi-hole and block most of it.

Suddenly the TV is pretty snappy, and all browsers feel so much smoother.

And I’m sitting here uneasy, thinking about how the hell I’m going to compress my map data any further so that my entire web app is no bigger than 2 MB. 😥

No, you need to go further: https://512kb.club/

Oh god, I’m not ready for the trauma and the emotional scars… D:

That’s straight up not true. It’s not even remotely close to that.

Some devs will include a whole library for one thing instead of trying to learn another way to do that thing.

`from * import *`: a whole library that was meant to do 10 things, but you only use one. And that for x libraries.

Nowadays libraries are built with tree-shaking in mind, so when it’s time to deploy the app only the code that’s actually used gets bundled.

It’s just that we have to make space for our 5,358 partners and the telemetry data they need.

* legitimate telemetry data

Legitimate interest to train AI

Let me (lemme?) translate this into customer-friendly business language:

Enhanced user experience

That still wouldn’t account for it. The code to collect this is tiny and the data isn’t stored locally. The whole point is for them to suck it up into their massive dataset.

PayPal’s app is 500 MB, and it just shows a number and lets you press a button to send a number to their server.

It’s insane

Check out the apps Hermit and Native Alpha. They make web pages run like an app. I’ve only run into a couple sites where they don’t work right.

Native Alpha sounds good, since it’s FOSS and uses Vanadium’s WebView. Are you still logged in to PayPal (or any other annoying website) a couple of months later, or does it revoke your session after a while?

I only use it rarely and I hate providing my info for 5 minutes just to do one transaction.

Dude!! What a badass concept, cannot wait to give this a shot!!

Has to send a number to Apple’s server too! Actually, not even sure if that’s client side.

You made me check it, and on my Android device it’s 337 MB (just the app). Jesus Christ.

Mine has 660MB with 7MB user data, 15MB cache.

LMAO, he also made me check it.

347 MB for me. No wonder I’m always struggling with storage on my 128 GB phone (with non-expandable storage, of course), and I don’t even have that many games, even fewer ROMs 😅

Cheaper & faster development by leveraging large libraries/frameworks, but inability to automatically drop most unused parts of those libraries/frameworks. You could in theory shrink Electron way down by yoinking out tons of browser features you’re not using, but there’s not much incentive to do it and it’d potentially require a lot of engineering work.

64kb should be enough for anyone

is that the size of Doom or something lol

It’s a reference to an old he-said-she-said quote attributed to Bill Gates from the 1980s.

“640K ought to be enough for anyone” (Bill Gates)

Yeah, though the joke is funny, this is the real answer.

Storage is cheap compared to creating custom libraries.

Also, storage is a cost for the user, and for Google in the case of the Play Store. So developers have no incentive to reduce the size.

Storage is cheap on a PC, it’s not cheap on mobile where it’s fixed and used as a model differentiator. They overcharge you so much. Oh, and they removed SD card slots from nearly all phones.

Nah, it’s fine. Clean up unused apps every once in a while. Base phones have more than enough space.

Yep. Apps are 20x bigger with no new features…that you are using.

Let’s not forget that the graphics for applications have scaled with display resolution, and people generally demand a smooth, modern look for their apps.

In the case of normal apps like PayPal, graphics shouldn’t be a huge factor, since they should be vectorized and there are hardly any graphics in apps like PayPal anyway.

The issue comes from frameworks.

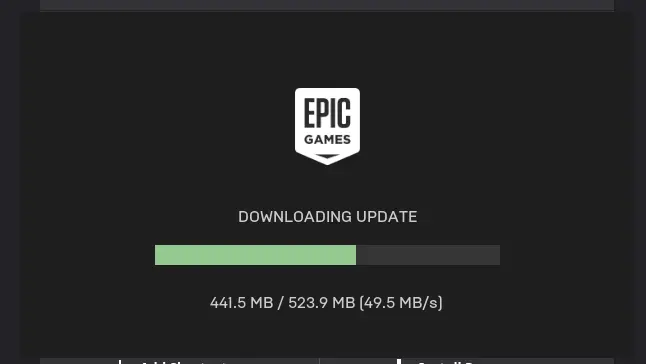

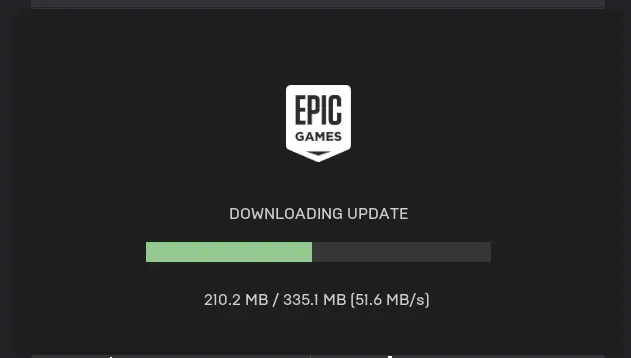

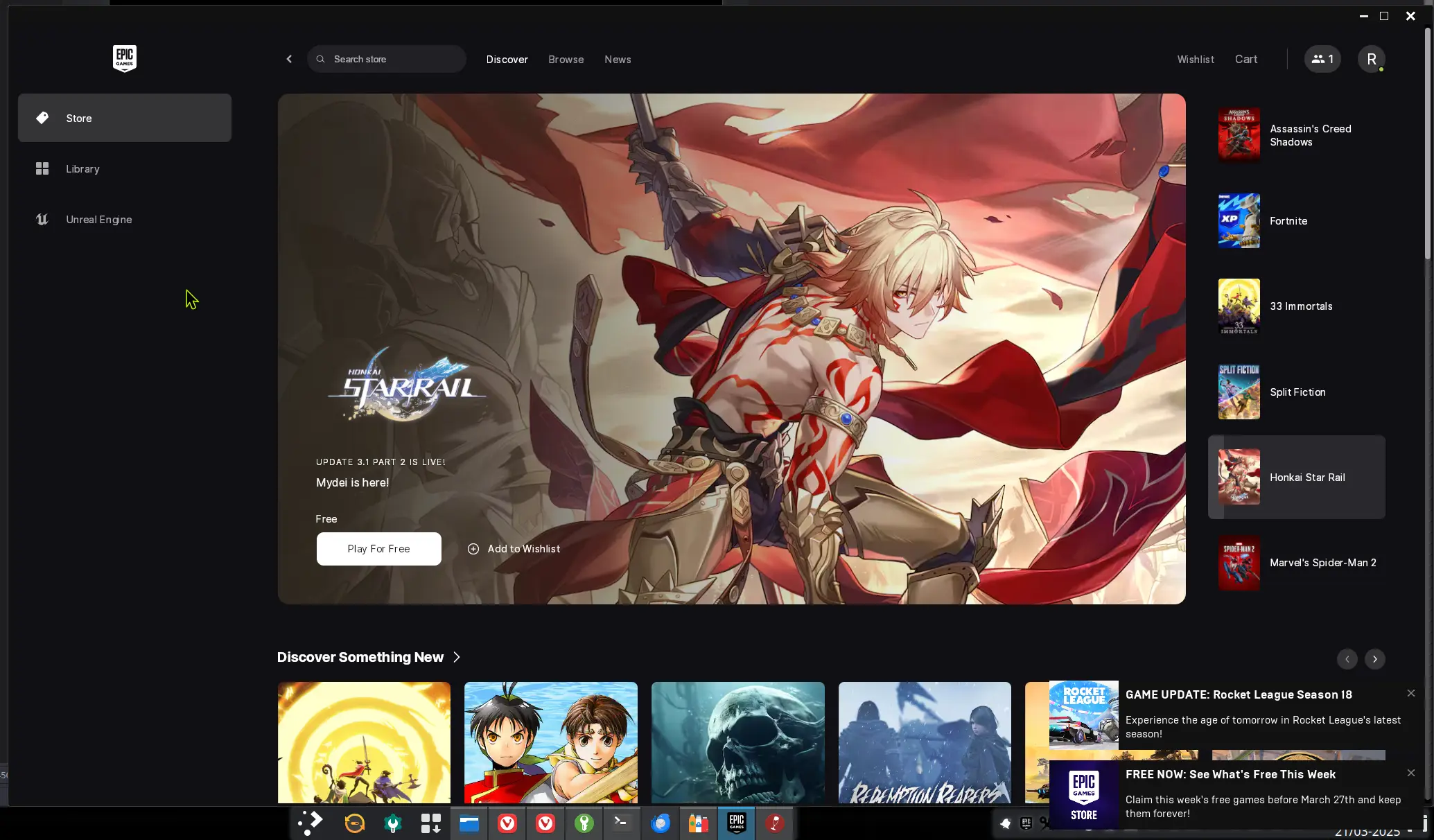

I just updated Epic Games Launcher. BEHOLD:

1st update

2nd update

Almost a gigabyte for a mostly blank interface, wtf.

I have a better one: Corsair iCUE. 4 GB for a fucking PNG simulator.

God, I hate Corsair. Not only do you need to download their garbage software to fucking turn off the RGB on a headset, you have to have it running or the RGB will turn on again!


Thanks for sharing that. My device isn’t listed, but I’d be surprised if Corsair changed how their shit works that much between devices. I’ll give it a try (it supposedly even works on Linux <3)

Ironically, I’ve found that Razer has particularly good open community support on Linux.

It’s actually pretty good. It works, unlike Synapse, which is fucking malware that tries to reinstall itself repeatedly.

Usually, instead of having 8-bit art, you have epic songs and very high definition textures. That is a good deal of why.

Textures and songs aren’t a thing in most apps, right? On Android, using Kotlin has produced much bigger app sizes than old Java did.

Kotlin doesn’t have much impact on binary size.

For smaller apps, maybe. I’ve seen apps that should take less than 1 MB grow to 15 MB or so.

That’s not due to kotlin.

Why else?

How would I know without looking at the app binary?

Backend devs: *sweats*

I think the epic songs and 4K textures are missing in my MS Office.

Yeah, but they made XLOOKUP, that’s worth a few hundred megabytes.

All hail XLOOKUP

That’s weird. Have you tried reinstalling?

Oh, they have new functionality. It’s all in the back end, detailing everything you do and sending it to the parent company so they can monetize your life.

Ads and trackers

Don’t forget poor optimization

That doesn’t make the software take up more space on a drive. Optimizing is likely to result in a slightly larger install that runs more efficiently.

...It does. Import a whole-ass library, use one function, once (probably one of the easiest to implement yourself). Boom, the file grew. Repeat.
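For the “easiest to implement yourself” case, here’s roughly what replacing one lodash-style helper could look like. A rough TypeScript sketch: this `chunk` is a hypothetical hand-rolled function that mimics lodash’s `_.chunk` for ordinary inputs, not a claim that it matches lodash on every edge case.

```typescript
// Hypothetical hand-rolled stand-in for a single lodash helper (_.chunk).
// Splits an array into groups of `size`; the last group holds any remainder.
function chunk<T>(items: readonly T[], size: number): T[][] {
  // Treat fractional sizes as their floor, and bail out on sizes below 1
  // instead of looping forever.
  const step = Math.floor(size);
  if (step < 1) return [];
  const result: T[][] = [];
  for (let i = 0; i < items.length; i += step) {
    result.push(items.slice(i, i + step));
  }
  return result;
}

console.log(chunk([1, 2, 3, 4, 5], 2)); // [ [ 1, 2 ], [ 3, 4 ], [ 5 ] ]
```

Whether this is worth it depends on the bundler: importing a single function from a modular build such as `lodash-es` may already get tree-shaken down to almost nothing, so hand-rolling mainly pays off when the dependency can’t be shaken.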

Lodash wants to know your location


Bro, just use AI, bro, you don’t need developers, bro, also skip the testing, bro, who is going to hack your SaaS, bro

Just let AI code, bro, it’s so much better and more reliable, just does what it’s told, it works so good, bro, AI is the future, it’s so smart.

Memory is cheap, and data sells well to many parties. Most apps are just storefronts for ads and data collection.

No wonder open-source apps are so light.

Fucking Chrome/Electron is why.

I honestly wouldn’t mind that if they could all use the exact same runtime so the apps could be a few MB each, but nooooo.

See: WebView2

Unfortunately, it is extremely painful to work with 😔 Enjoy rolling your own script versioning and update systems instead of using Squirrel et al.

Edit: I think Tauri works by targeting this and WebKitGTK via their wrapper library. Unfortunately, I can’t get my coworkers to write Rust.

Isn’t that just the same pig, just wearing different makeup? I’m not a fan of msedgewebview2.exe allocating 500+ MB RAM just because Teams is open, but maybe that’s Teams’ fault…

Not quite. Instead of a bundled, pinned version of Electron, it’s whatever version of Edge’s Chromium-based engine is installed, shared across all programs using it. That means you don’t need to keep multiple copies of the browser engine libraries loaded into memory.

That’s not to say that building things on web technology is an efficient use of resources. Even if multiple programs are sharing the WebView2 library, they’re still dealing with the fundamental performance and memory problems caused by building an app in JavaScript.

As for why Teams is so memory hungry? I would blame Teams.

Discord manages to make a half-decent, highly responsive webview app, and that’s with the overhead of having its own separate instance of Electron.

EDIT: The original poster was also talking about application binary size, not runtime memory consumption. Application binary size should actually be significantly reduced by linking to the system webview instead of bundling Electron.

400 MB iPhone banking app has entered the chat

Are there any alternatives to Electron? And why don’t people move to alternatives if Electron is so huge and resource-heavy?

I mean, Object Pascal was doing the “write once, run anywhere” thing decades ago. Java, too. The former, especially, can make very small programs with big features.

Java (and Object Pascal, I’m assuming) have very old-looking UIs. Discord’s gonna have trouble attracting users if their client looks like a billing system from 2005. Also, what do you do about the web client? Implement the UI once in HTML/CSS/JS, and again in JForms?

So if you’re picking one UI to make cross-platform, and you need a web client, do you pick JForms and make it work on the web? or React and make it work on desktop?

Yeah, Electron has flaws, but it’s basically the only way to make a truly cross-platform native and web app. I would rather take a larger installed size and actually have apps that are available everywhere.

The sad truth is there aren’t enough developers to go around to make sleek native apps for every platform, so something that significantly frees dev time is a great real world solution for that.

I think maybe you’re confused. Java drives a significant percentage of Android apps. It absolutely can do modern UI. I can almost guarantee you’ve interacted with a Java program this year that you never considered.

Pascal is more niche, but it can do modern, too.

Java was doing web clients before the web could and still can. I don’t know much about Delphi’s web stuff, but I know they’ve targeted it for years now.

WASM and transpiling blur the lines, too. LVGL can provide beautiful interfaces on the web as well as platforms Electron could never target, and works with any language compatible with the C ABI.

I’m not saying these strategies are without their own warts, but there are other ways to deliver good experiences across platforms with a ~single codebase in a smaller payload. But mostly nobody bothers because they just reach for Electron. It’s this era’s “nobody ever got fired for picking Intel”.

We need more people working with and on alternatives, not just for efficiency but also for the health of the software ecosystem. Google’s browser hegemony is feasting. Complexity has become their moat, preventing a fork from being viable without significant resources. Mozilla is off in a corner consuming itself in desperation.

A US-based company holds a monopoly over the free web and a hell of a lot of our non-web software. So maybe let’s look for ways to avoid feeding the beast, yes? And we can get more efficient software in the process.

Isn’t JavaFX still actually using HTML and CSS, though? Like, it’s cool that the UI logic is in Java, but doesn’t using CSS mean you still need to lug a rendering engine around, even if not a whole browser?

The alternative is “just serve it as a regular website”. It doesn’t need to be an app to do its job. Name a functionality which only exists in Electron but not in the standard browser API.

Because companies give zero fucks. They will tell you they need tons of IT people, when in reality they want tons of underpaid programmers. They want stuff as fast and cheap as possible. What doesn’t cause immediate trouble is usually good enough. What can be patched up somehow is kept running, even when it only leads you further up the cliff you will fall off eventually.

Management is sometimes completely clueless. They’d rather hire twice as many people to keep some poorly developed app running than invest in a new, better-developed app that requires less maintenance and provides a better user experience. Zero risk tolerance and zero foresight.

It still generates money, you keep it running. Any means are fine.

Ironically the management that does have a clue often is hamstrung somewhere up the chain.

I’d argue that deploying from one codebase to 3+ different platforms is new functionality, although not for the end user per se.

I do wish, though, that more of these web apps came with no batteries included (by default, or at least as a selectable option), i.e. used whatever webview is available on the system instead of shipping another one whether you want it or not.

But if your tool chain is worth anything the size of each binary shouldn’t be bigger. To oversimplify things a bit: it’s just #ifdefs and a proper tool chain.

In the web development world, on the other hand, everything was always awful. Every Node.js package has half the world as dependencies…

That’s how a bunch of apps broke when M$ got rid of Internet Explorer.

There’s lots of valid reasons for this.

IMO the biggest one people don’t account for is this: dev salaries are incredibly high. If you want fast performance, the most optimal way would be to target the platform and use low-level native code, so C++ or Swift.

It would cost you like 20x more than just using electron and it will cost you bigly if you have multiple platforms to maintain.

So it turns out having 1 team crunching out an app on electron with hundreds of dependencies is cheaper, naturally that’s what most companies will do.

Don’t want to use Electron? Then it’s kind of the same issue, except this time you’re using Java or C# and you have to handle platform-specific things on your own (think audio libraries, for example). It’s definitely doable, but it will be more costly than using a cross-platform Chromium app.

Technically there is no “most optimal”. Optimal is basically best.

Remember the day GDPR dropped and websites suddenly started loading much faster?